I want a way to merge the elements of one array into another (adding them in at the end) that's at least as fast as Array.concat. It does not need to be elegant or easy to understand.

I have spent a while looking and it appears that this is impossible. But maybe there's something I'm missing?

Resolves NO if no one provides such a way before close.

Update 2025-10-09 (PST) (AI summary of creator comment): The merged result must remain an Array type. Reimplementing a bespoke data structure that changes the type is not acceptable.

Update 2025-10-12 (PST) (AI summary of creator comment): Solutions that substitute a different task (even if related) are not acceptable. The solution must actually perform the specific task of concatenating one array into another as described, not solve a different problem.

Update 2025-10-12 (PST) (AI summary of creator comment): The solution should work in both Chromium browsers and Firefox.

Update 2025-10-12 (PST) (AI summary of creator comment): Performance requirement on Firefox: Solutions only need to roughly match the performance of other methods (not necessarily beat concat) on Firefox.

Array type requirement: The solution must use actual JavaScript Arrays, not custom data structures or classes that mimic array behavior.

Array size context: The arrays being concatenated can range from 0 to 100,000 elements.

Update 2025-10-12 (PST) (AI summary of creator comment): Reference validity requirement: The solution must maintain valid references (the creator indicates this is a common and necessary requirement for the use case).
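To make the requirements above concrete, here is a minimal sketch of the two behaviours being compared. The function names `concatCopy` and `appendInPlace` are hypothetical and not from the thread, and the loop is only the most obvious baseline, not a claimed solution.

```javascript
// Baseline the question compares against: returns a NEW array, so other
// references to `a` do not see the appended elements.
function concatCopy(a, b) {
  return a.concat(b);
}

// In-place candidate: mutates `a`, so every existing reference stays valid.
// (`a.push(...b)` is shorter, but may throw a RangeError on very large arrays
// in some engines because every element becomes a function argument.)
function appendInPlace(a, b) {
  const n = b.length; // read once so that appendInPlace(a, a) terminates
  for (let i = 0; i < n; i++) {
    a.push(b[i]);
  }
  return a;
}

const a = [1, 2, 3];
const alias = a; // a second reference to the same array
appendInPlace(a, [4, 5]);
console.log(alias.length); // 5: the alias sees the appended elements

const copy = concatCopy(a, [6]);
console.log(copy === a); // false: concat produced a different array
```

The question is whether anything with the semantics of `appendInPlace` can run as fast as `concatCopy`.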

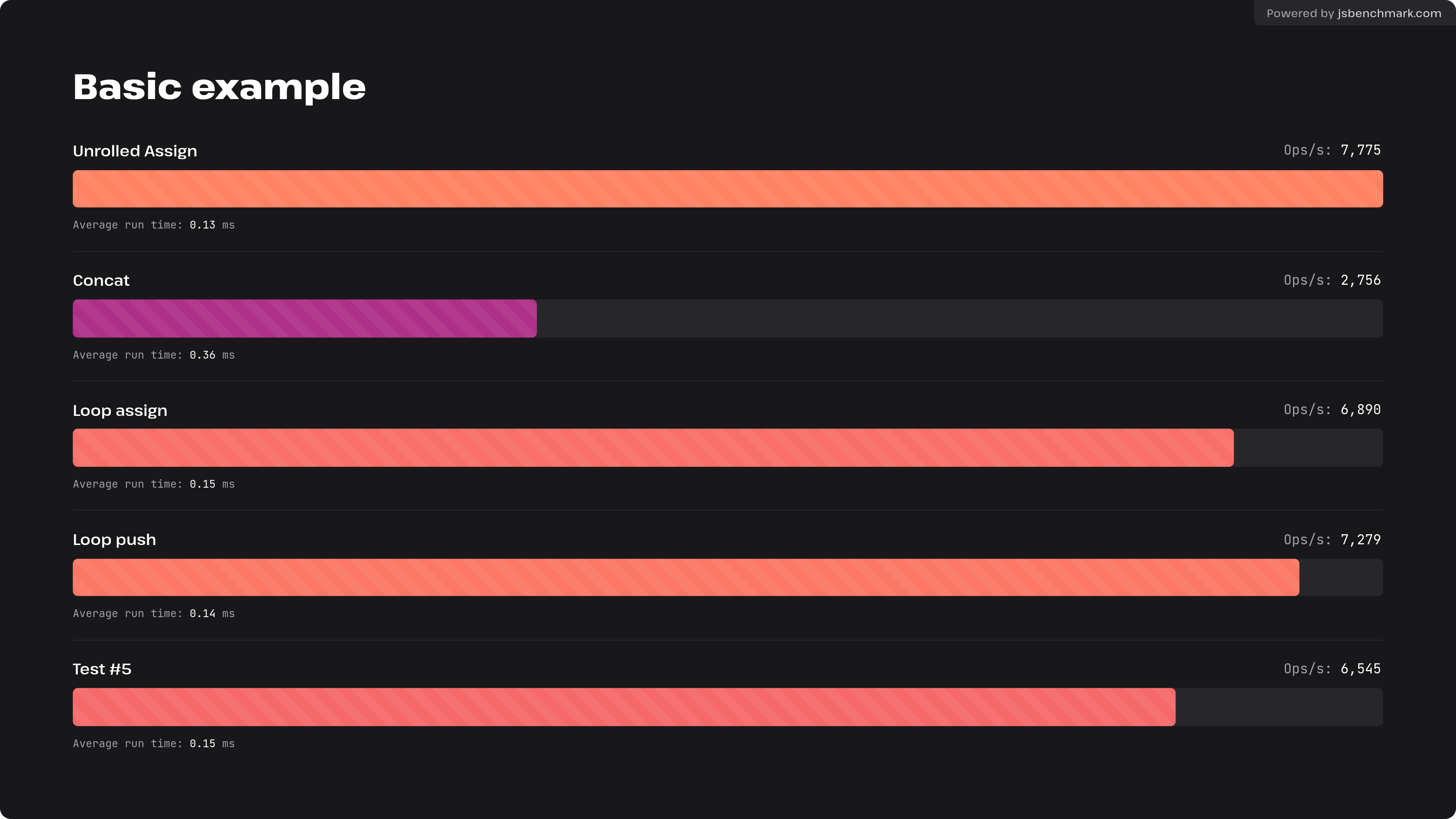

On Firefox, @AshDorsey's https://jsbm.dev/w5FUEaK7v6raH shows that `.concat` is a lot slower than all other methods!

If I gave you a class that supported all the vanilla Array APIs, and you could do `array[index]`, etc. on it, would that count?

This seems a bit like an XY problem. What problem requires merging elements of one array into another without using concat?

What length are the arrays that you are adding, typically?

my benchmark assumes b.length < a.length, also given that these benchmarks were previously provided and deemed that concat was still fastest, i assumed chromium

you can use Object.setPrototypeOf, which allows most things, although writing doesn't work. You can however make a proxy object that also proxies read/writes, but this likely will be slower for most other array operations
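A small sketch of what the `Object.setPrototypeOf` trick does and where it falls short, assuming `c` is the result of `a.concat(b)`. The variable names are only for illustration.

```javascript
const a = [1, 2];
const b = [3, 4];
const c = a.concat(b); // [1, 2, 3, 4]

// Make `c` the prototype of `a`. `a` is still a real Array with its own
// elements and its own `length`.
Object.setPrototypeOf(a, c);

console.log(a[2]);             // 3: index 2 is missing on `a`, so the lookup falls through to `c`
console.log(a.length);         // 2: `length` is an own property of `a`, so it is not inherited
console.log(Array.isArray(a)); // true

// A write creates an own element on `a` instead of updating `c`.
a[2] = 9;
console.log(a.length); // 3
console.log(c[2]);     // 3 (unchanged)
```

Reads past the original end of `a` work, but `length`, iteration, and writes still behave as if `a` were the short array, which is why this does not amount to an in-place concat.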

Interesting. Well then on Firefox it just needs to roughly match the other methods.

I think I have to say no, since otherwise it's trivial to make this resolve YES and there's no question at hand.

Mostly I just don't like the complexity of designing my own data structures, and was hoping I could use a default array.

In this case they can be anywhere from 0 - 100,000 elements.

@IsaacKing regarding 1., doesn't this resolve YES then since simple .push outperforms .concat? Or do you mean that the solution has to match concat on Firefox and Chromium?

I meant "why don't you just use `.concat`?" not why you can't use a data structure.

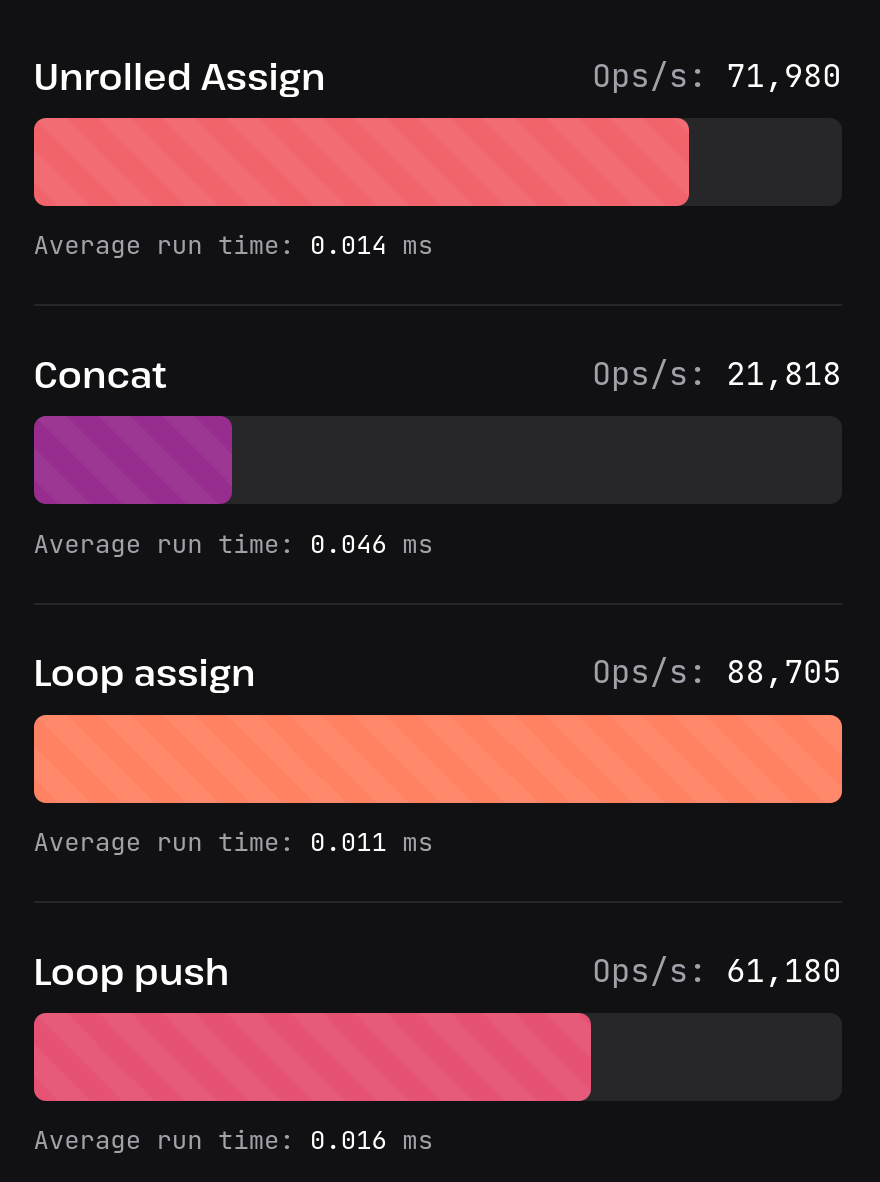

@IsaacKing wait hold on. I would have thought that intuitively, concat is slower anyways since it creates a third array, and it turns out this is true if you do more appends. If you rerun @AshDorsey's benchmarks with 10 appends per run, concat is slower than the alternatives:

(this was on chromium; results are similar on firefox.)

since im assuming you are doing more than one concat, does this count?
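One way to see why repeated appends could favour in-place methods is a simplified cost model. This is an assumption for illustration, not a measurement: it only counts how many element slots each strategy writes, and the function names are hypothetical.

```javascript
// Appending `k` chunks of `m` elements to an array that starts with `n` elements.

// concat builds a fresh array on every call, so it rewrites everything
// accumulated so far plus the new chunk.
function slotsWrittenByConcat(n, m, k) {
  let total = 0;
  let len = n;
  for (let i = 0; i < k; i++) {
    len += m;     // the new array holds the old elements plus the chunk
    total += len; // every slot of that new array is filled
  }
  return total;
}

// An in-place append only writes the new elements. `n` is unused here because
// this model ignores any reallocation the engine performs when the array grows.
function slotsWrittenInPlace(n, m, k) {
  return m * k;
}

console.log(slotsWrittenByConcat(1000, 100, 10)); // 15500
console.log(slotsWrittenInPlace(1000, 100, 10));  // 1000
```

Real timings depend on how each engine grows and copies arrays, so this only explains the direction of the effect, not its size.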

@calour your benchmark makes concat do 10x more work, btw. Fixing it does not mean that it is fastest again, though.

@AshDorsey wdym? it makes concat do 10x more work, and everything else also has to do 10x more work (where "work" ∝ "number of appends")

@AshDorsey Uh, what did you have in mind? I would say probably no; I could certainly reimplement my own bespoke datastructure to solve this problem, but that's a lot more effort.

@IsaacKing You could override the prototype of a and redirect index/all function calls to instead operate on a c kept alive via lambda that is the result of c = a.concat(b). This is cursed as hell and has many downsides, but it should be just as fast as concat.

@AshDorsey This doesn't actually appear to work. Kinda surprising, but oh well. If you did have a trivial wrapper over a this could have been possible, but there's really no point at that point, since you could just assign to the a in the object at that point.

https://jsbm.dev/w5FUEaK7v6raH

This is the best I could do after many hours of trying. I do not think there is a way to match concat.

@AshDorsey Neat idea! I had noticed that unrolling loops and object cloning gave a dramatic speed increase. But I hadn't considered this sort of partial unrolling to still allow for it to work on variable inputs. Clever!
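For readers who don't open the link, a rough sketch of what partial unrolling can look like. This is not the code from the linked benchmark; the function name and the unroll factor of 4 are assumptions chosen for illustration.

```javascript
// Push elements of `b` onto `a` four at a time, then handle the remainder
// one at a time so the function works for any input length.
function appendUnrolled4(a, b) {
  const n = b.length; // read once so that appendUnrolled4(a, a) terminates
  const limit = n - (n % 4);
  let i = 0;
  for (; i < limit; i += 4) {
    a.push(b[i], b[i + 1], b[i + 2], b[i + 3]);
  }
  for (; i < n; i++) {
    a.push(b[i]);
  }
  return a;
}

const target = [0];
appendUnrolled4(target, [1, 2, 3, 4, 5, 6]);
console.log(target.join(",")); // 0,1,2,3,4,5,6
```

The fixed-size inner call keeps the argument count small and constant, while the trailing loop covers lengths that are not a multiple of the unroll factor.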

@AshDorsey

lmao on chromium, in that benchmark, with those exact sizes, this is the fastest I could come up with: 30% faster than the unrolled approach, but still way slower than concat

The bottleneck appears to be that in the Chromium JavaScript engine, resizing an array is much, much slower than allocating it at a given size. As a result, my approach is faster: copy a to a temp array, empty a by setting its length to 0, set its length to the final length, and then copy a and b back into the new memory.

https://jsbm.dev/vcnSaDwleZnXl

Edit: this effect appears to be version specific: it doesn't reproduce on chrome on my macbook :(
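The approach described above can be sketched roughly as follows. This is a reconstruction from the description, not the code behind the link, and the function name `appendViaRebuild` is hypothetical.

```javascript
function appendViaRebuild(a, b) {
  const tmp = a.slice();          // temporary copy of a's current elements
  const src = b === a ? tmp : b;  // a is about to be emptied, so read from the copy if b is a
  const n = tmp.length;
  const m = src.length;

  a.length = 0;     // drop the old contents
  a.length = n + m; // resize once to the final length

  for (let i = 0; i < n; i++) {
    a[i] = tmp[i];
  }
  for (let j = 0; j < m; j++) {
    a[n + j] = src[j];
  }
  return a; // same array object as before, so existing references stay valid
}

const data = [1, 2, 3];
const sameData = data;
appendViaRebuild(data, [4, 5]);
console.log(sameData.join(",")); // 1,2,3,4,5
```

Whether this beats a plain loop depends on the engine and version, as the edit above notes.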

@HastingsGreer That also doesn't reproduce on chromium mobile (Pixel 9). It's half as fast as the unrolled version. (Personally, I've been testing on Chrome Dev on an M2 Mac Mini and on my Pixel 9 Chrome Stable)

Is your concern with concat the way that it becomes O(N^2) if you build a long array by repeatedly concating short arrays? This function lazyConcat is a lil fucked up, but should be a drop in replacement for concat, but without the O(N^2) behavior

https://claude.ai/public/artifacts/7372c0ce-0b57-4327-8396-b636e0c55de3

```
class LazyConcatArray {
  constructor(initialArray = []) {
    this._baseArray = [...initialArray];
    this._pendingArrays = [];
    this._isFlattened = true;
  }

  // Queue the arrays instead of copying them right away.
  concat(...arrays) {
    this._pendingArrays.push(...arrays);
    this._isFlattened = false;
    return this;
  }

  // Merge every queued array into the base array with a single concat call.
  _flatten() {
    if (!this._isFlattened && this._pendingArrays.length > 0) {
      this._baseArray = this._baseArray.concat(...this._pendingArrays);
      this._pendingArrays = [];
      this._isFlattened = true;
    }
  }

  get(index) {
    this._flatten();
    return this._baseArray[index];
  }

  set(index, value) {
    this._flatten();
    this._baseArray[index] = value;
  }

  get length() {
    this._flatten();
    return this._baseArray.length;
  }

  toArray() {
    this._flatten();
    return [...this._baseArray];
  }

  // Wrap an instance in a Proxy so that obj[i] reads and writes work.
  static create(initialArray = []) {
    const instance = new LazyConcatArray(initialArray);
    return new Proxy(instance, {
      get(target, prop) {
        // Symbol keys cannot be converted with Number(), so skip them.
        const index = typeof prop === "string" ? Number(prop) : NaN;
        if (Number.isInteger(index) && index >= 0) {
          return target.get(index);
        }
        return target[prop];
      },
      set(target, prop, value) {
        const index = typeof prop === "string" ? Number(prop) : NaN;
        if (Number.isInteger(index) && index >= 0) {
          target.set(index, value);
          return true;
        }
        target[prop] = value;
        return true;
      }
    });
  }
}

function lazyConcat(arr1, arr2) {
  const isLazy = (obj) => obj instanceof LazyConcatArray ||
    (obj && obj._baseArray !== undefined && obj._pendingArrays !== undefined);

  const lazy1 = isLazy(arr1);
  const lazy2 = isLazy(arr2);

  if (lazy1 && lazy2) {
    const result = LazyConcatArray.create(arr1._baseArray);
    result._pendingArrays = [...arr1._pendingArrays];
    result._isFlattened = arr1._isFlattened;
    result.concat(arr2._baseArray);
    if (arr2._pendingArrays.length > 0) {
      result.concat(...arr2._pendingArrays);
    }
    return result;
  } else if (lazy1) {
    const result = LazyConcatArray.create(arr1._baseArray);
    result._pendingArrays = [...arr1._pendingArrays];
    result._isFlattened = arr1._isFlattened;
    result.concat(arr2);
    return result;
  } else if (lazy2) {
    const result = LazyConcatArray.create(arr1);
    result.concat(arr2._baseArray);
    if (arr2._pendingArrays.length > 0) {
      result.concat(...arr2._pendingArrays);
    }
    return result;
  } else {
    return LazyConcatArray.create(arr1).concat(arr2);
  }
}
```

@HastingsGreer In classic AI fashion, it did not even understand the task, instead substituting in a totally different, easier, more common task.

@IsaacKing alas, it is I who misunderstood the task. I thought this was about the classic n^2 concatenation performance bug. sorry!

Why do you specifically need to modify the array in place rather than creating a new one?

If the reason is so that other places that reference the array will automatically be updated, may I suggest the following structure?

Create an "ArrayReference" class that contains a single attribute, the array

Instead of passing around raw arrays, pass around ArrayReferences. When you need to operate on the underlying array, access it through the ArrayReference attribute.

When you want to "mutably" concat to the array, just do `array_ref.array = array_ref.array.concat(other_array)`.

That way the changes will be reflected everywhere without needing to actually modify an array in-place.

I think this doesn't technically solve the criteria required for the market but maybe it solves your underlying issue?
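A minimal sketch of that structure, for illustration only. The class name `ArrayReference` comes from the comment above; the `concatInPlace` method name and the `holder` object are assumptions.

```javascript
class ArrayReference {
  constructor(array = []) {
    this.array = array;
  }

  // Not truly in place: swaps in the new array that concat returns.
  concatInPlace(other) {
    this.array = this.array.concat(other);
    return this;
  }
}

const ref = new ArrayReference([1, 2]);
const holder = { items: ref };  // other code keeps the reference object
const rawBefore = ref.array;    // other code that kept the raw array instead

ref.concatInPlace([3, 4]);

console.log(holder.items.array.length); // 4: visible through the reference object
console.log(rawBefore.length);          // 2: a raw array captured earlier is now stale
```

The last line shows the catch: this only works if every consumer goes through the reference object rather than holding the underlying array directly.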

@A I agree this is a decent practical solution, but it's inelegant and only necessary due to Javascript limitations. I was hoping there'd be some way to do it "properly".

(Also for long-running tasks this is not quite as good a solution, because leaving unused arrays lying around will waste time on the garbage collector. But probably not very much.)